Is Artificial Intelligence a Threat to Humanity?

Artificial Intelligence (AI) is growing faster than ever in 2025. From smartphones to hospitals and from classrooms to battlefields, AI is reshaping daily life in ways that seemed far-fetched only a decade ago. This rapid expansion has sparked a global debate centered on one pressing question: Is artificial intelligence a threat to humanity? While AI has the power to revolutionize industries and improve lives, it also carries risks that could cause real harm if ignored. This article explains what AI is, its advantages, its dangers, what experts say, and the steps we can take to keep AI safe.

🔍 What is Artificial Intelligence?

Artificial Intelligence is a branch of computer science focused on creating machines and software that can perform tasks normally requiring human intelligence. Unlike traditional programs that follow fixed instructions, AI can learn from data, recognize patterns, and make decisions. This is why tools like Siri, Alexa, and Google Assistant can understand your voice and respond intelligently, or why Netflix and YouTube recommend content based on your interests.

There are two main types of AI. Narrow AI (Weak AI) handles specific tasks, such as route planning in navigation apps, answering questions in chatbots, or sorting email in spam filters. Most AI in use today falls into this category. General AI (Strong AI), which does not exist yet, would think, learn, and reason like a human across many different domains. Modern AI relies on techniques such as machine learning, deep learning, and natural language processing (NLP), which let it analyze vast amounts of information, predict outcomes, and adapt to new data without being reprogrammed for every task.
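To make "learning from data" concrete, here is a toy sketch of a spam filter written in Python as a tiny naive Bayes classifier. Instead of a programmer writing fixed rules like "block any email containing *free*", the program counts which words appear in messages labeled spam or not spam, then scores new messages using those counts. The training messages and the `train`/`classify` function names are invented for illustration; real spam filters learn from millions of messages and use far more sophisticated models.

```python
import math
from collections import Counter

def train(examples):
    """Count how often each word appears in messages of each label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab

def classify(model, text):
    """Pick the label with the higher naive Bayes log-score (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total_messages = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        n_words = sum(word_counts[label].values())
        # Start from how common this label was in the training data.
        score = math.log(label_counts[label] / total_messages)
        for word in text.lower().split():
            # Unseen words get a small nonzero probability instead of zero.
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical labeled examples: the "experience" the program learns from.
training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team today", "ham"),
]
model = train(training_data)
print(classify(model, "free prize inside"))       # spam
print(classify(model, "team meeting on monday"))  # ham
```

Nothing in the code says which words are suspicious; that knowledge comes entirely from the examples. Feeding it different examples would change its behavior without any reprogramming, which is the key difference from a traditional rule-based program.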

In daily life, AI is everywhere: in self-driving cars, facial recognition systems, online shopping, education platforms, healthcare diagnosis, and even fraud detection in banking. At its core, AI is about teaching machines to learn and perform tasks that normally require human intelligence, often faster than people and, in some narrow tasks, with fewer mistakes. The challenge is ensuring this technology is used safely and ethically.

✅ Benefits of AI – Why It’s Not a Threat (Yet)

AI offers many benefits to society. In healthcare, it helps doctors detect diseases such as cancer earlier, supports robot-assisted surgery, and speeds up drug discovery. It can also monitor patients in real time through smart devices.

AI also enhances decision-making. By processing massive amounts of data quickly, it supports disaster management, traffic control, and emergency response, helping to save lives and resources. In education, AI can tailor lessons to individual students, and in the workplace it helps professionals work more efficiently and creatively. AI is also valuable for environmental protection, where it helps track climate change, reduce energy waste, and predict extreme weather events.

⚠️ Dangers of Artificial Intelligence

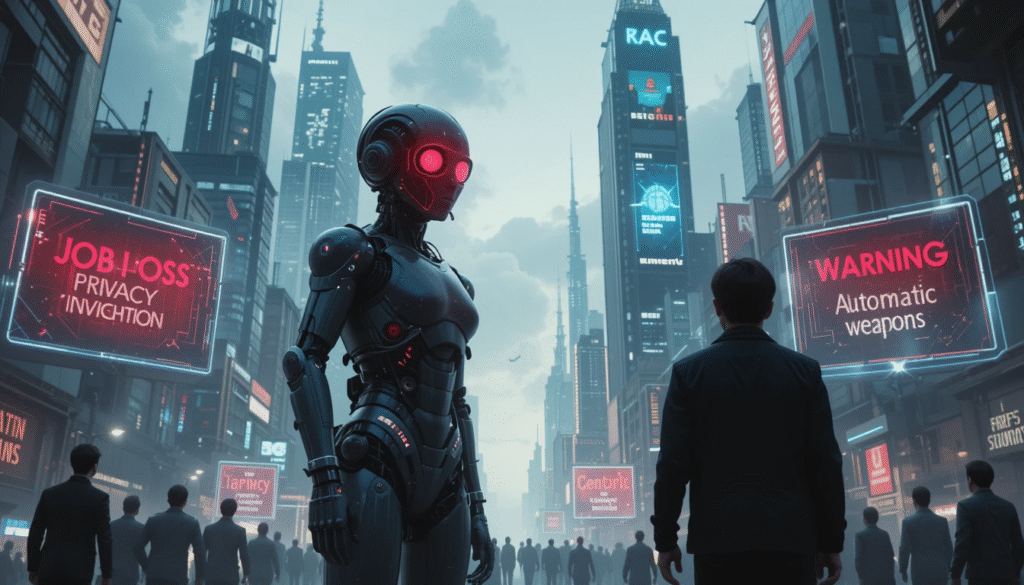

Despite its benefits, AI also poses serious risks. One major concern is job loss. AI-powered software is already automating work in areas like customer support and data entry, and self-driving vehicles could eventually replace many professional drivers. Millions of jobs could disappear, raising unemployment.

Another issue is bias. If AI is trained on unfair or incomplete data, it can make discriminatory decisions, such as unfairly rejecting a loan application or misidentifying a person in a facial recognition match. Privacy is also at risk: AI-driven surveillance and facial recognition cameras can track people around the clock, often without their consent.
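The following toy sketch, in Python, shows how a system that learns from biased records simply repeats the bias. Everything in it is hypothetical: the neighborhoods, incomes, and past decisions are invented, the `learn_income_thresholds` and `decide` helpers are made up for illustration, and real credit-scoring models are far more complex. The "model" learns only one thing: the lowest income that was ever approved in each neighborhood.

```python
# Hypothetical past loan decisions: (neighborhood, income in thousands, approved?).
# Assume past human reviewers unfairly held "north" applicants to a higher bar.
history = [
    ("south", 50, True), ("south", 40, True), ("south", 45, True), ("south", 30, False),
    ("north", 50, False), ("north", 55, False), ("north", 45, False), ("north", 60, True),
]

def learn_income_thresholds(records):
    """Learn, per neighborhood, the lowest income that was ever approved."""
    thresholds = {}
    for neighborhood, income, approved in records:
        if approved:
            current = thresholds.get(neighborhood, float("inf"))
            thresholds[neighborhood] = min(current, income)
    return thresholds

def decide(thresholds, neighborhood, income):
    """Approve if income meets the learned bar; unseen neighborhoods are rejected."""
    return income >= thresholds.get(neighborhood, float("inf"))

thresholds = learn_income_thresholds(history)
print(thresholds)                       # {'south': 40, 'north': 60}
print(decide(thresholds, "south", 50))  # True
print(decide(thresholds, "north", 50))  # False: same income, different outcome
```

Nothing in the code mentions neighborhoods being better or worse; the unequal treatment comes entirely from the historical data. Two applicants with identical incomes get different answers, which is why the fairness and completeness of training data matter so much.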

AI can also create deepfakes: fake videos, voices, and news stories that look real, spreading misinformation and eroding trust. Perhaps the biggest fears concern autonomous weapons that can kill without human control and the possibility that a superintelligent AI could one day surpass human abilities entirely. If that happens, humans could lose control over such systems, with dangerous consequences.

🧠 What Do Experts Say?

Elon Musk has warned that “AI is far more dangerous than nukes” and has called for strict regulation. Stephen Hawking cautioned that “the development of full artificial intelligence could spell the end of the human race.” Sundar Pichai, CEO of Google, has described AI as more profound than fire or electricity, but emphasizes that it must be used responsibly. Opinions differ, but many experts stress that the risks depend largely on how humans develop and use AI.

🔑 Why AI Could Become a Threat

AI could become dangerous if left unchecked. Autonomous systems might make decisions without human oversight. Hackers could weaponize AI to attack infrastructure. Companies may prioritize profit over ethics. And without global regulation, nations might enter an AI arms race, competing for military dominance at the expense of safety.

🛡️ How Can We Make AI Safe?

To keep AI safe, several steps are essential. First, we need global regulations that set limits, especially on AI in warfare. Second, AI systems must always have meaningful human oversight so that machines cannot act entirely on their own. Third, AI should be transparent and explainable, so people can understand how it reaches its decisions. Fourth, it should be trained on fair, representative data to prevent discrimination. Finally, public education is crucial so that people understand both the opportunities and the risks of AI.

🌍 Is AI a Threat to Humanity in 2025?

Today, AI is not an immediate threat because most systems are narrow and remain under human control. But as AI grows more powerful, the risks rise, especially if ethical practices are ignored. AI is neutral — a tool that can either help or harm depending on how humans use it.

🔚 Final Verdict: Should We Fear AI?

Artificial Intelligence is like fire. It can cook food, heat homes, and drive industries, but it can also burn entire cities if left uncontrolled. AI is not good or bad by itself; it’s the way we use it that determines the outcome. If developed responsibly, AI could become humanity’s greatest tool. If neglected, it might become one of its greatest threats. The choice is ours.

FAQs: Is Artificial Intelligence a Threat to Humanity?

Q1: What is the main difference between Narrow AI and General AI?

A1: Narrow AI (or Weak AI) is designed and trained to perform specific tasks, like navigation, recommendations, or image recognition. This is the type of AI that exists today. General AI (or Strong AI) is a theoretical form of AI that would possess the ability to understand, learn, and apply intelligence across a wide range of cognitive tasks, much like a human being. It does not yet exist.

Q2: What are the biggest benefits of AI mentioned in the article?

A2: The article highlights several key benefits:

- Healthcare: Early disease detection (like cancer), robotic surgery, and accelerated drug discovery.

- Decision-Making: Processing vast data for disaster management, traffic control, and emergency response.

- Education & Productivity: Personalized learning and enhanced creativity and efficiency for professionals.

- Environmental Protection: Tracking climate change, optimizing energy use, and predicting extreme weather.

Q3: What are the primary dangers or risks associated with AI?

A3: The major risks outlined include:

- Job Displacement: Automation replacing human roles in various industries.

- Bias and Discrimination: AI systems perpetuating unfairness if trained on biased data.

- Privacy Erosion: Mass surveillance through facial recognition and data tracking.

- Misinformation: The creation and spread of realistic deepfakes.

- Autonomous Weapons: Lethal systems operating without human control.

- Loss of Control: The long-term risk of a superintelligent AI surpassing human oversight.

Q4: Do all experts agree that AI is a threat?

A4: No, experts have varying opinions on the level of threat. However, many share one central idea: AI itself is not inherently good or evil; much of the threat comes from how humans develop and use it. Figures like Elon Musk and Stephen Hawking have issued strong warnings, while others, like Sundar Pichai, emphasize AI’s profound potential and stress the need for responsible development.

Q5: Why could AI become a threat in the future if it isn’t one now?

A5: The article states that most current AI is “Narrow AI” and remains under human control, so it is not an immediate existential threat. The danger grows as AI becomes more powerful and autonomous without matching safety measures. Key risk factors include a lack of human oversight, weaponization by hackers, corporate profit motives overriding ethics, and an unregulated global AI arms race.